Stream Data Analytics

Architecture Types, Toolset, and Results

In data analytics since 1989, ScienceSoft helps companies across 30+ industries build scalable, low-latency stream analytics solutions to enable business agility in decision-making and risk mitigation.

80% of Companies Report Revenue Increase Thanks to Stream Data Analytics

According to the 2022 KX & CEBR survey, 80% of participants achieved a significant revenue increase after implementing stream data processing and analytics. The survey features feedback from 1,200 companies in the manufacturing, automotive, BFSI, and telecommunications domains and spans the US, UK, Germany, Singapore, and Australia. The revenue drivers include timely detection of operational and financial anomalies, significant improvement of business processes, and reduction in non-people operational costs.

At the same time, almost 600 executives and technical specialists surveyed for The State of the Data Race Report by DataStax agree that real-time data processing and analytics have a transformative impact on business. The key metrics positively affected by the technology include revenue growth, customer satisfaction, and market share.

Types of Stream Analytics Architectures

Stream analytics is used for real-time processing of continuously generated data. Lambda and Kappa are two widely used architecture designs for building scalable, fault-tolerant streaming systems. The choice between them depends on your analytics goals and use cases, including how you need to combine real-time insights with historical data context.

Lambda architecture

The Lambda architecture features dedicated layers for stream and batch processing that are built with different tech stacks and function independently. The stream processing layer analyzes data as it arrives and is responsible for real-time output (e.g., an alert about abnormal heart rate or blood pressure during remote patient monitoring). The batch processing layer analyzes data on a defined schedule (e.g., every 15 minutes, every hour, every 12 hours) and enables historical data analytics (e.g., patterns in heart rate fluctuations, what-if models for trading risk assessment). On top of the two layers sits a serving layer (a NoSQL or distributed database) that combines the real-time and batch data views to enable real-time BI insights and self-service data exploration.

Best for: businesses that need to combine real-time insights and analytics-based actions with in-depth historical data analytics.
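To make the layer split concrete, here is a minimal in-memory sketch in Python, assuming a single heart-rate stream. The class `ToyLambda`, its methods, and the alert thresholds are hypothetical illustrations, not part of any product or framework: `on_event` plays the stream layer, `run_batch` plays the scheduled batch layer, and `average_bpm` plays the serving layer that merges both views.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

# Hypothetical alert thresholds, chosen only for illustration (not clinical guidance).
LOW_BPM, HIGH_BPM = 40, 130


@dataclass(frozen=True)
class Reading:
    patient_id: str
    bpm: int


class ToyLambda:
    """In-memory toy model of the stream, batch, and serving layers."""

    def __init__(self) -> None:
        self.master_data = []      # append-only history: the batch layer's input
        self.batch_view = {}       # patient_id -> (count, bpm_sum) from the last batch run
        self.realtime_view = defaultdict(lambda: [0, 0])  # increments since the last batch run
        self.alerts = []           # real-time output of the stream layer

    def on_event(self, r: Reading) -> None:
        """Stream layer: handle each event as it arrives."""
        self.master_data.append(r)
        acc = self.realtime_view[r.patient_id]
        acc[0] += 1
        acc[1] += r.bpm
        if not LOW_BPM <= r.bpm <= HIGH_BPM:
            self.alerts.append(r)  # e.g., an abnormal heart rate alert

    def run_batch(self) -> None:
        """Batch layer: recompute the view from the full history (normally on a schedule)."""
        totals = defaultdict(lambda: [0, 0])
        for r in self.master_data:
            totals[r.patient_id][0] += 1
            totals[r.patient_id][1] += r.bpm
        self.batch_view = {p: (c, s) for p, (c, s) in totals.items()}
        # In this synchronous toy, every event ingested so far is now in the batch view.
        self.realtime_view.clear()

    def average_bpm(self, patient_id: str) -> Optional[float]:
        """Serving layer: merge the batch view with the real-time increments."""
        bc, bs = self.batch_view.get(patient_id, (0, 0))
        rc, rs = self.realtime_view.get(patient_id, (0, 0))
        count = bc + rc
        return (bs + rs) / count if count else None


pipeline = ToyLambda()
pipeline.on_event(Reading("p1", 72))
pipeline.on_event(Reading("p1", 150))  # outside the range, so an alert is raised
pipeline.run_batch()
pipeline.on_event(Reading("p1", 78))   # not yet in the batch view
print(len(pipeline.alerts), pipeline.average_bpm("p1"))  # 1 100.0
```

In a production system, each layer would run on separate infrastructure, and a batch job would take real time to complete. Handling the events that arrive during that window is where the data discrepancies mentioned among the Lambda cons come from.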

Lambda pros

- High fault tolerance: even if data is lost at the stream processing layer, the batch layer still holds all the historical data. In addition, each layer has its own redundant components for even greater reliability.

- Enables in-depth data exploration to uncover patterns and trends.

- Can enable efficient training of machine learning models based on vast historical data sets.

Lambda cons

- May require extra effort to avoid data discrepancies caused by differences in the processing time of the two layers.

- Comparatively more difficult and costly to develop due to the tech stack diversity.

- More challenging to test and maintain.
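The discrepancy risk can be illustrated with a small, hypothetical example: if the serving layer simply adds the real-time view to a batch view that already covers some of the same events, those events are counted twice. The sketch assumes each event carries an event timestamp and that the batch view records the latest timestamp it covers (`batch_cutoff`); the function names and data are made up for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical event format: (event_time, key).
Event = Tuple[int, str]


def naive_merge(batch_counts: Dict[str, int], realtime: List[Event]) -> Dict[str, int]:
    """Adds every real-time event to the batch counts, ignoring overlap."""
    merged = dict(batch_counts)
    for _, key in realtime:
        merged[key] = merged.get(key, 0) + 1
    return merged


def cutoff_merge(batch_counts: Dict[str, int], batch_cutoff: int,
                 realtime: List[Event]) -> Dict[str, int]:
    """Adds only events newer than the batch cutoff, so no event is counted twice."""
    merged = dict(batch_counts)
    for ts, key in realtime:
        if ts > batch_cutoff:
            merged[key] = merged.get(key, 0) + 1
    return merged


# Five "login" events at t = 1..5. The last batch run covered t <= 3,
# but the stream layer still holds events from t = 2 onward.
batch_counts = {"login": 3}
realtime = [(2, "login"), (3, "login"), (4, "login"), (5, "login")]

print(naive_merge(batch_counts, realtime))      # {'login': 7}  (t=2 and t=3 counted twice)
print(cutoff_merge(batch_counts, 3, realtime))  # {'login': 5}  (matches the true total)
```

This simple rule assumes that every event timestamped at or before the cutoff was already in the batch input; late-arriving events need separate handling.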

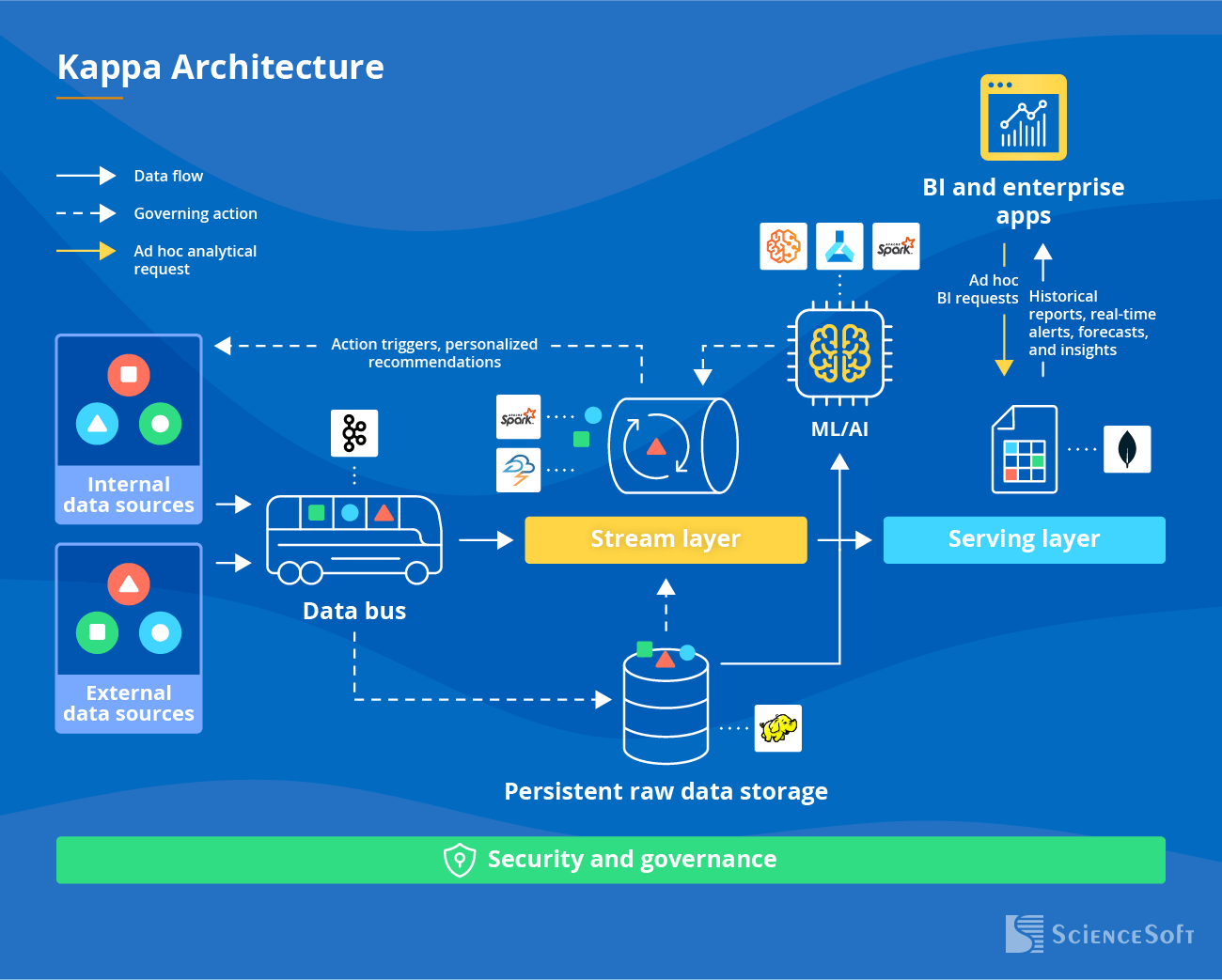

Kappa architecture

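In the Kappa architecture, both real-time and batch analytics are handled by a single stream processing layer, so both rely on the same technologies. Historical analytics is performed by reprocessing (replaying) stored event data through the same pipeline. The serving layer receives a unified view of analytics results from the real-time and batch pipelines.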

Best for: systems that must provide low-latency analytical output and feature historical analytics capabilities as a complementary component (e.g., financial fraud detection systems, online gaming platforms).
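Here is a minimal Python sketch of the Kappa idea, using a fraud-detection example. It assumes the stream layer retains its input in a replayable log (a plain list stands in for it here, the way a Kafka topic with long retention might in practice); `process`, `replay`, and the single-threshold fraud rule are hypothetical. The point is that live output and historical recomputation run through the same function.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Txn:
    account: str
    amount: float


@dataclass
class FraudState:
    totals: Dict[str, float] = field(default_factory=dict)
    flagged: List[Txn] = field(default_factory=list)


def process(state: FraudState, txn: Txn, limit: float) -> None:
    """The one processing step, shared by live and historical runs."""
    state.totals[txn.account] = state.totals.get(txn.account, 0.0) + txn.amount
    if txn.amount > limit:  # hypothetical single-threshold fraud rule
        state.flagged.append(txn)


def replay(log: Iterable[Txn], limit: float) -> FraudState:
    """Historical analytics: rerun the same step over the retained log from the start."""
    state = FraudState()
    for txn in log:
        process(state, txn, limit)
    return state


# Stand-in for a durable, replayable event log.
event_log: List[Txn] = []
live = FraudState()

for txn in [Txn("a", 50.0), Txn("b", 900.0), Txn("a", 300.0)]:
    event_log.append(txn)               # every event is retained...
    process(live, txn, limit=1000.0)    # ...and processed immediately

# After changing the rule, recompute history with the same code instead of a separate batch stack.
historical = replay(event_log, limit=250.0)
print(len(live.flagged), len(historical.flagged), historical.totals)
# 0 2 {'a': 350.0, 'b': 900.0}
```

Because there is only one code path, changing the logic means replaying the log rather than maintaining a second batch implementation.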

Kappa pros

- Potentially cheaper to implement due to a single tech stack.

- Cheaper to test and maintain.

- Higher flexibility in scaling and expanding with new functionality.

Kappa cons

- Lower fault tolerance than Lambda because there is only one processing layer.

- Limited capabilities for historical data analytics, including ML model training.

Tech and Tools to Build a Real-Time Data Processing Solution

With expertise in multiple technologies like Hadoop, Kafka, Spark, NiFi, Cassandra, MongoDB, Azure Cosmos DB, Azure Synapse Analytics, Amazon Redshift, Amazon DynamoDB, Google Cloud Datastore, and more, ScienceSoft selects a tailored set of tools and services to ensure the optimal cost-to-performance ratio of a stream analytics solution in each particular case.


ScienceSoft’s Competencies and Experience

- Since 1989 in data analytics.

- Since 2003 in end-to-end big data services.

- A team of business analysts, solution architects, data engineers, and project managers with 5–20 years of experience.

- Practical experience in 30+ domains, including healthcare, banking, lending, investment, insurance, retail, ecommerce, manufacturing, logistics, energy, telecoms, and more.

- In-house compliance experts in GDPR, HIPAA, PCI DSS, SOC 1/2, and other global and local regulations.

- Partnerships with AWS, Microsoft, and Oracle.

- ISO 9001- and ISO 27001-certified to guarantee top service quality and complete security of our customers' data.

Consider ScienceSoft to Support Your Stream Analytics Initiative

To deliver projects on time, on budget, and within the agreed scope, we follow our established project management practices that allow us to efficiently overcome time, budget, and change request constraints.

The cost of implementing a stream analytics solution may vary from $200,000 to $1,000,000+, depending on the solution's complexity. Use our online calculator to get a ballpark estimate for your case. It's free and non-binding.

